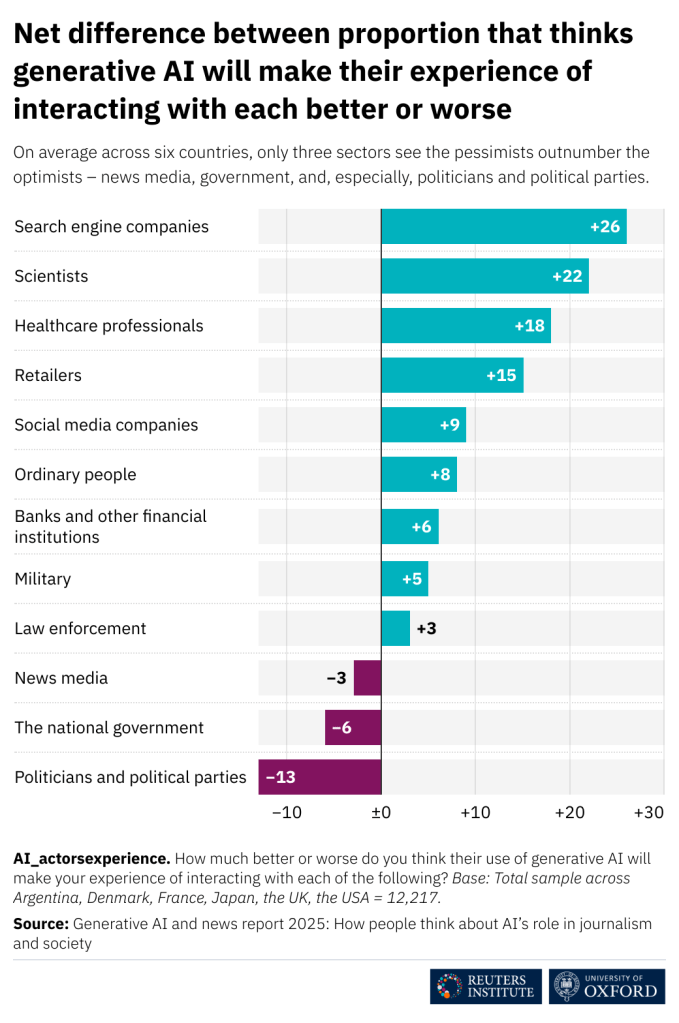

How do people think different sectors’ use of generative AI will change their experience of interacting with them?

That’s one of the questions we fielded in a new survey, and it produced one of the three key findings from my perspective: looking across the six countries covered, there are more optimists than pessimists for, e.g., science and healthcare, and for search engines and social media, but more pessimists than optimists for news media, the national government, and, especially, politicians and political parties.

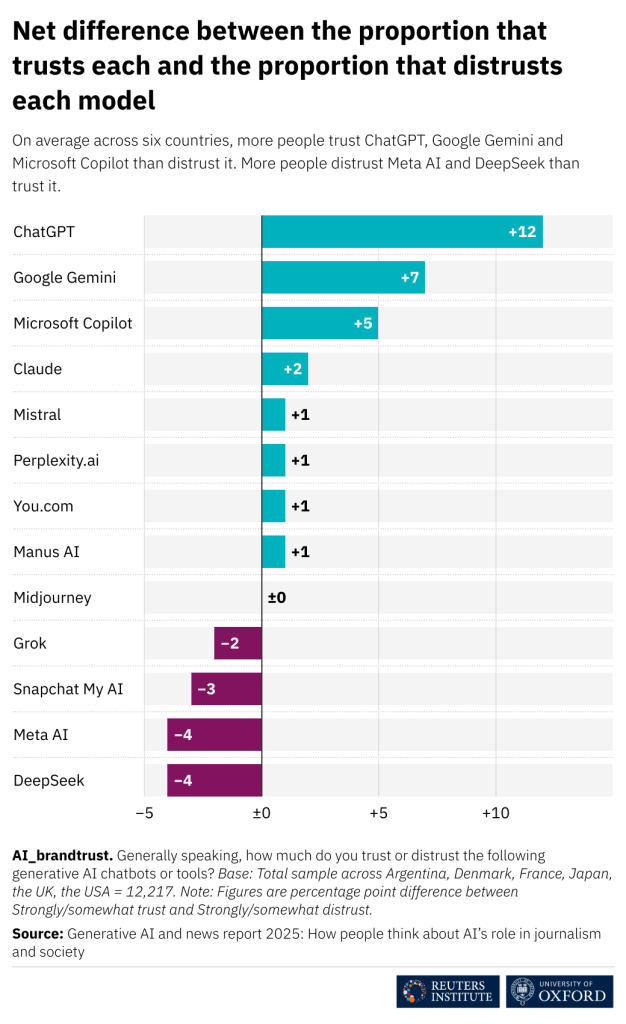

Elsewhere in the survey, we asked whether people trust different generative AI offerings. The picture is highly differentiated, with net positive trust scores for, e.g., ChatGPT, Google Gemini, and Microsoft Copilot, but net negative scores for those seen as part of various social media companies.

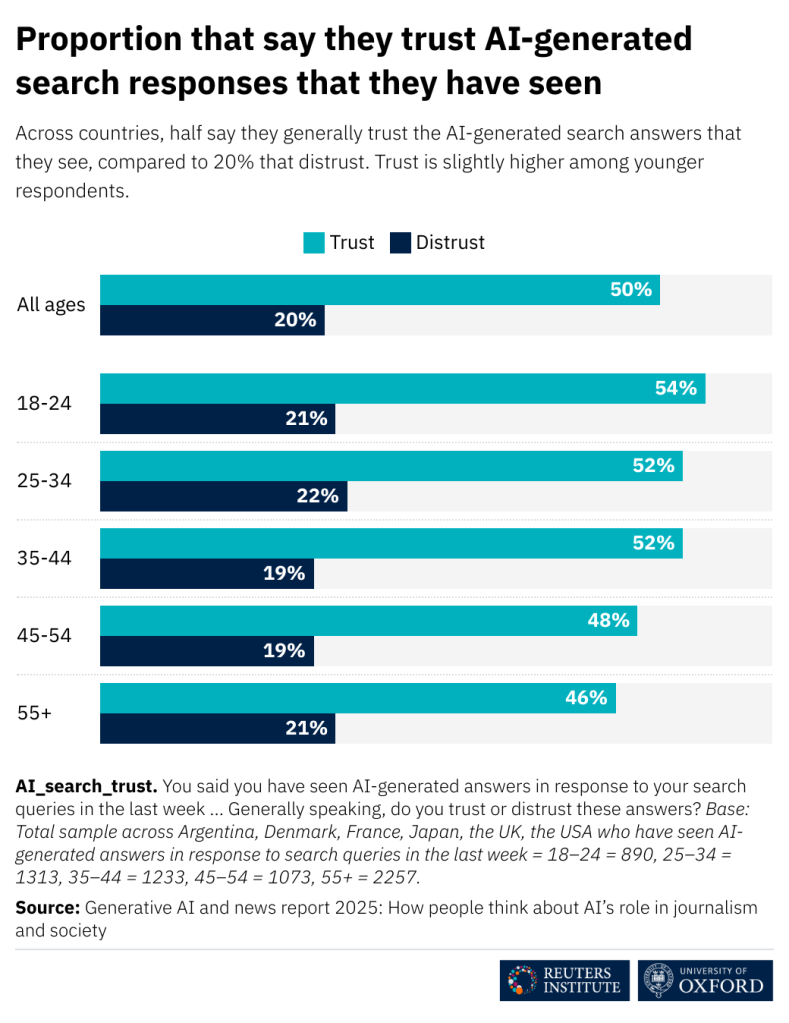

Finally, as search engines increasingly integrate AI-generated answers, and more and more of us see these all the time, we asked about trust in these answers. Trust scores are high across the board, with higher net positives than for any of the standalone tools. (With this and the previous question, surveys do not measure whether the entities in question are trustworthy, and they tell us nothing about whether people should trust them; they only provide data on whether people do trust them.)

Beyond that, the report, which I wrote with Felix Simon and Richard Fletcher and which is published by the Reuters Institute for the Study of Journalism, is chock full of fresh data on generative AI use (which has basically doubled since last year), on what people use these tools for (increasingly for information, putting them in clear, direct competition with search engines), and on what they don’t (yet) use them for all that much (getting the latest news).